What is AI literacy?

AI literacy is the ability to understand, use, and question AI effectively and responsibly. It means grasping the opportunities AI offers, the risks it poses, and the harm it can cause if misused. It blends technical awareness with an understanding of ethics and law, including frameworks such as the EU AI Act.

AI literacy is nuanced because it has several dimensions. For one, AI systems don’t behave like traditional software: their outputs can change even when the input stays the same, so users must learn to interpret and check results, for example with explainable AI (XAI) techniques.
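To see why the same input can produce different outputs, consider the toy sketch below. It assumes a generative model that chooses its next word by sampling from a probability distribution, which is a common decoding strategy. The candidate words and their scores are invented for illustration and do not come from any real model. Greedy decoding always returns the same word, while sampling can return a different word on each run.

```python
import math
import random

# Invented scores (logits) a model might assign to candidate next words
# after one fixed prompt; purely illustrative.
LOGITS = {"approved": 2.0, "rejected": 1.5, "pending": 0.5}


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert raw scores to probabilities; a lower temperature sharpens them."""
    scaled = {word: score / temperature for word, score in logits.items()}
    peak = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {word: math.exp(value - peak) for word, value in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def greedy(logits: dict[str, float]) -> str:
    """Deterministic decoding: always choose the highest-scoring word."""
    return max(logits, key=logits.get)


def sample(logits: dict[str, float], rng: random.Random, temperature: float = 1.0) -> str:
    """Stochastic decoding: draw a word with the probability given by softmax."""
    probs = softmax(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so each run can differ
    print("greedy: ", [greedy(LOGITS) for _ in range(5)])
    print("sampled:", [sample(LOGITS, rng) for _ in range(5)])
```

Running the script repeatedly prints the same greedy list every time, while the sampled list usually changes. This is why AI-literate users check outputs rather than assume a repeated question gets a repeatable answer.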

The level and focus of literacy also depend on the AI system’s risk category: a low-risk chatbot calls for less depth of knowledge than a tightly regulated high-risk system. Throughout, AI literacy must balance regulatory awareness with practical, value-driven applications.

In short, AI literacy is the mix of technical skill, ethical reflection, and real-world judgment that turns AI from a black box into a tool you can use with confidence.

How does AI literacy affect your organisation?

AI literacy determines how well your organisation can use AI to its advantage. When employees understand how AI works, its limits, and its risks, they can apply it safely to improve workflows, decisions, and innovation. It also helps them spot errors or unexpected results, which is important because AI doesn’t always give the same answer for the same input.

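Article 4 of the EU AI Act, which has applied since 2 February 2025, requires providers and deployers of AI systems to ensure, as far as possible, a sufficient level of AI literacy among their staff and others who operate AI on their behalf. These measures must take into account people’s technical knowledge, experience, and training, the context in which the AI is used, and the people it affects. In practice, developers of high-risk systems need detailed technical and legal training, while other staff still need a basic understanding of AI and awareness of its ethical issues. Good literacy helps prevent mistakes and legal problems while making sure AI delivers real value.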

How AI literacy needs to be implemented

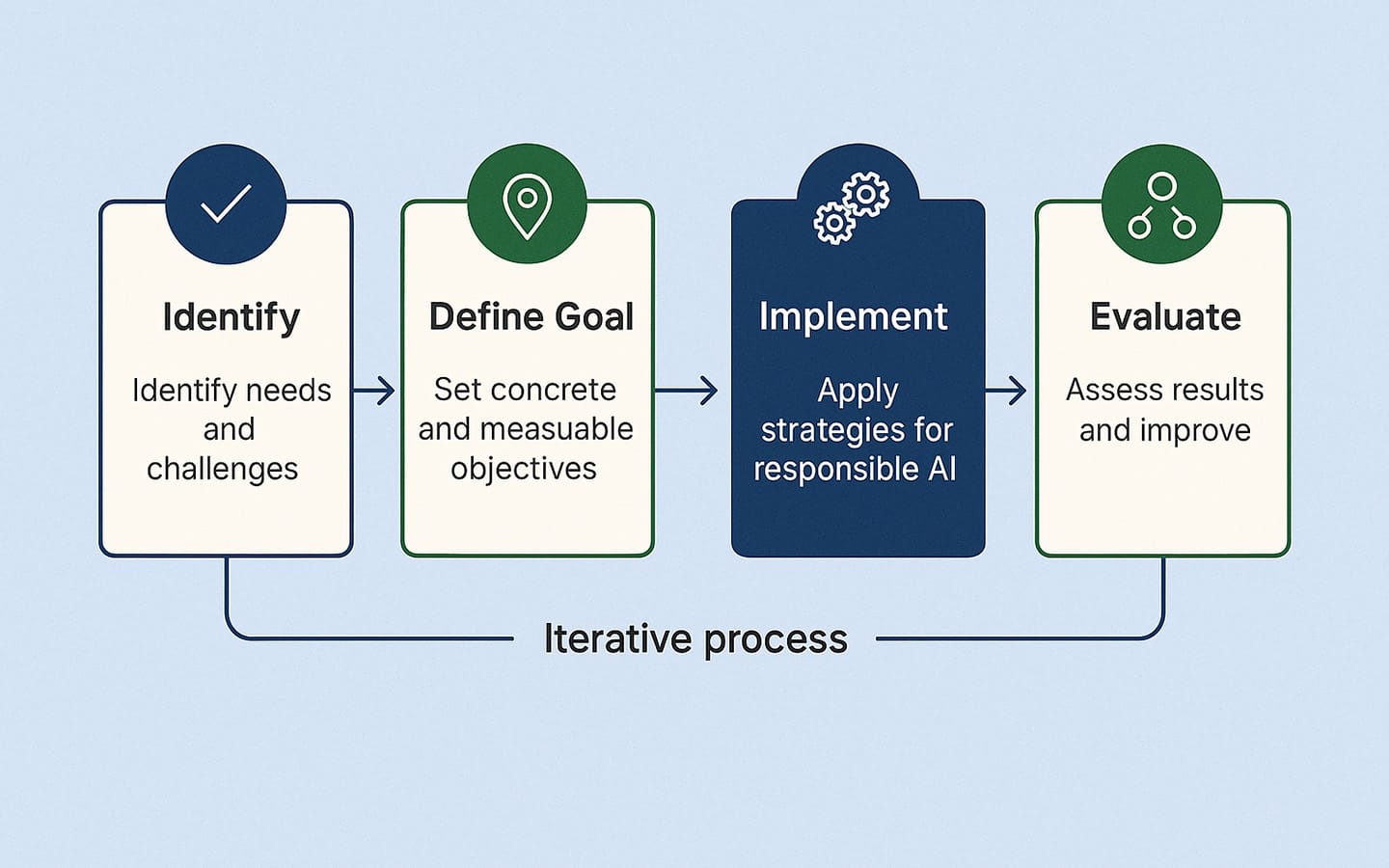

Building AI literacy in your organisation is not a one-time project but an iterative process: you regularly revisit and improve your measures as your AI systems, regulations, and business needs change.

The AI literacy framework below helps you structure this journey into four stages: Identify, Define Goals, Implement, and Evaluate.

Step 1: Identify

Start by mapping your organisation’s needs and challenges with AI. Register all AI systems you use, from high-risk tools like recruitment screening to simple ones like chatbots. Check which regulations apply to each system and identify the knowledge and skill levels in your team.

Also list the risks and responsibilities linked to each AI tool. This step gives you a clear view of where AI is used, where the gaps are, and where the biggest opportunities lie.
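A register like this can start as a spreadsheet. The Python sketch below expresses the same idea as data and uses it to list literacy gaps, highest-risk systems first. It is a minimal sketch under stated assumptions: the three risk tiers are a simplification loosely based on the EU AI Act (prohibited practices are left out because they should not be in use at all), and the literacy scale, required levels, and example systems are hypothetical. Classifying a real system still needs legal review.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class RiskTier(IntEnum):
    """Simplified risk tiers loosely based on the EU AI Act (assumption).

    Prohibited practices are banned outright, so they are not modelled here.
    """
    MINIMAL = 1
    LIMITED = 2  # e.g. customer-facing chatbots, which carry transparency duties
    HIGH = 3     # e.g. recruitment screening


# Hypothetical literacy scale, lowest level first.
LEVELS = ["none", "foundation", "intermediate", "advanced"]

# Hypothetical minimum level for teams that use a system in each tier.
REQUIRED_LEVEL = {
    RiskTier.MINIMAL: "foundation",
    RiskTier.LIMITED: "intermediate",
    RiskTier.HIGH: "advanced",
}


@dataclass
class AISystem:
    name: str
    purpose: str
    tier: RiskTier
    owner: str
    # Maps each team that uses the system to its current literacy level.
    teams: dict[str, str] = field(default_factory=dict)


def literacy_gaps(register: list[AISystem]) -> list[tuple[str, str, str, str]]:
    """Return (system, team, current, required) for every team below the
    required level, highest-risk systems first."""
    gaps = []
    for system in sorted(register, key=lambda s: s.tier, reverse=True):
        required = REQUIRED_LEVEL[system.tier]
        for team, current in system.teams.items():
            if LEVELS.index(current) < LEVELS.index(required):
                gaps.append((system.name, team, current, required))
    return gaps


# Hypothetical example register.
register = [
    AISystem("Support chatbot", "Answer customer FAQs", RiskTier.LIMITED,
             owner="Customer service", teams={"Support": "foundation"}),
    AISystem("CV screener", "Shortlist job applicants", RiskTier.HIGH,
             owner="HR", teams={"Recruiters": "foundation", "HR analytics": "advanced"}),
]

for gap in literacy_gaps(register):
    print(gap)
```

With this example data, the script prints two gaps: `('CV screener', 'Recruiters', 'foundation', 'advanced')` followed by `('Support chatbot', 'Support', 'foundation', 'intermediate')`. Sorting by tier puts the high-risk gap first, which feeds directly into prioritising goals in the next step.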

Step 2: Define Goals

Set clear, measurable goals for improving AI literacy. Prioritise the most important risks and opportunities, such as compliance for high-risk AI under the EU AI Act or more accurate customer-service AI. Match your training needs to the specific tools and roles in your organisation.

Define who will be responsible for what, such as a compliance officer tracking regulatory changes or a developer leading explainable AI practices. This ensures your literacy efforts are focused and purposeful.

Step 3: Implement

Roll out strategies and actions to build AI literacy. This includes training and awareness sessions, governance practices, and making AI usage transparent to staff and customers. Use real-world examples (like showing how bias can creep into recruitment algorithms) and provide role-specific learning paths.

Create documents or guides that explain how your organisation uses AI and what checks are in place. Offer hands-on sessions so employees can safely experiment with AI tools.

Step 4: Evaluate

Regularly measure and improve your AI literacy program. Include AI literacy in periodic risk analyses and track whether your measures are effective. For example, re-test staff knowledge after training, or review whether AI errors are being caught more often.
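Both checks in the example reduce to simple arithmetic. The sketch below assumes knowledge is measured with a quiz scored from 0 to 100, taken before and after training, and that you can count how many known AI errors (for instance, from audits) staff flagged in each review period. The names and numbers are invented for illustration.

```python
from statistics import mean


def knowledge_gain(before: dict[str, float], after: dict[str, float]) -> float:
    """Average change in quiz score for staff who took both the pre- and post-test."""
    both = before.keys() & after.keys()  # ignore people who missed one of the tests
    if not both:
        raise ValueError("no one took both tests")
    return mean(after[person] - before[person] for person in both)


def catch_rate(caught: int, total_errors: int) -> float:
    """Share of known AI errors that staff flagged; 0.0 if none were logged."""
    return caught / total_errors if total_errors else 0.0


# Invented example data.
before = {"ana": 55.0, "ben": 70.0, "cho": 60.0}
after = {"ana": 80.0, "ben": 75.0, "dev": 90.0}

print(f"Average gain: {knowledge_gain(before, after):.1f} points")  # ana +25, ben +5
print(f"Catch rate before training: {catch_rate(6, 20):.0%}")
print(f"Catch rate after training:  {catch_rate(14, 20):.0%}")
```

Only staff who took both tests count towards the gain, so the result is 15.0 points here, and the catch rate rises from 30% to 70%. Tracking these figures over several cycles is what turns the evaluation step into evidence for management reports.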

Gather feedback from employees and adjust training to their needs. Document progress in management reports. Because this is an ongoing, iterative process, your AI literacy must evolve as technology and laws change.

Examples of AI literacy in practice

Implementing AI literacy can be challenging. While laws and policies set out the goals, they rarely explain how to put them into practice. The following examples show simple, practical ways to move from theory to everyday application.

Role-based AI literacy training

One effective way to build AI literacy is to adapt training to different roles in the organisation. Start by mapping your workforce into groups: leadership (strategic oversight), technical teams (development and maintenance), compliance/legal staff (regulation and risk), and frontline staff (day-to-day use).

Provide different levels of content: foundation courses on the basics of AI and AI ethics, intermediate sessions on sector-specific use cases, and advanced training on algorithm design. Use a mix of formats such as self-paced modules, workshops, and hands-on exercises.

Always link examples to the organisation’s sector: healthcare teams might focus on patient data privacy, finance teams on fair credit scoring. This approach works because it avoids a “one-size-fits-all” model and ensures everyone learns what’s most relevant to their role.

AI risk assessment as a literacy tool

Another approach is to use AI risk assessments as a way to teach employees about AI. Create a simple framework and train staff to apply it when buying, building, or using AI.

Appoint “AI champions” in each department to guide assessments and act as literacy contacts. Keep a record of AI systems, using templates that note the purpose, risk level, context, and mitigation measures. Review these regularly to detect systems adopted outside governance (“shadow-AI”) and update risk measures.

This method makes learning practical by linking AI ethics and compliance directly to everyday technology decisions, while reducing the risk of misuse.
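The regular review can be partly automated. The sketch below assumes you have some list of AI tools actually in use (for instance from a software inventory, which the approach above does not prescribe) and a register that records when each system was last reviewed. The tool names, dates, and the 180-day review interval are hypothetical.

```python
from datetime import date, timedelta

# Hypothetical register: registered system -> date of its last risk review.
REGISTER = {
    "support-chatbot": date(2025, 1, 10),
    "cv-screener": date(2024, 6, 3),
}


def find_shadow_ai(observed_tools: set[str], register: dict[str, date]) -> set[str]:
    """Tools seen in use that are missing from the register (possible shadow AI)."""
    return observed_tools - set(register)


def overdue_reviews(register: dict[str, date], today: date, max_age_days: int = 180) -> list[str]:
    """Registered systems whose last review is older than max_age_days (hypothetical interval)."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, reviewed in register.items() if reviewed < cutoff)


# Hypothetical list of tools observed in use.
observed = {"support-chatbot", "cv-screener", "free-transcription-app"}
print("Unregistered:", find_shadow_ai(observed, REGISTER))
print("Review overdue:", overdue_reviews(REGISTER, today=date(2025, 3, 1)))
```

Here the unregistered transcription app is flagged for the department’s AI champion to assess, and the CV screener is flagged because its last review predates the cutoff. A script only surfaces candidates; deciding whether a tool is acceptable remains a human judgement.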

Multi-channel AI awareness campaigns

A third option is to run ongoing awareness campaigns that keep AI literacy visible. Use newsletters, intranet posts, short videos, podcasts, and internal blogs to share updates, success stories, and warnings. Add interactive elements like AI “office hours” with experts, quick quizzes, or small rewards for participation.

Adapt content for different audiences: simple explainers for non-technical staff, regulatory updates for compliance teams. Time updates to coincide with major events, such as new AI deployments or policy changes.

Why is AI literacy important?

Over the past few years, AI has moved from niche research labs into mainstream apps, productivity tools, and public debates. As a result, AI literacy has become essential for anyone who wants to navigate, question, and shape this technology responsibly.

1. Accountable use

When people understand how AI works, they can use it more responsibly. This means being aware of things like model bias, limitations of training data, and the difference between correlation and causation in AI predictions.

A journalist using an AI tool to draft an article should know that the model might “hallucinate” facts. With AI literacy, they’ll verify sources before publishing. Without it, misinformation can spread rapidly, damaging credibility and public trust.

2. Informed oversight

Leaders, policymakers, educators, and business owners with AI literacy can set meaningful rules and safeguards. If they understand how recommendation systems, facial recognition, or large language models work, they can make evidence-based policies rather than relying on marketing claims or fear.

For example, a school board deciding whether to adopt an AI grading system needs to understand how the algorithm scores essays and whether it has cultural or linguistic biases. If the board skips this due diligence, it might unknowingly disadvantage certain students.

3. Shared societal responsibility

Responsible AI has become a more prominent topic in recent years because AI systems shape society in subtle ways: which news is prioritised, which job applicants get interviews, and which neighbourhoods receive extra policing.

If AI literacy is concentrated in a small group of experts, the public loses the ability to hold those systems accountable. Broad literacy means more people can question, challenge, and improve AI’s role in daily life.